Intelligent agents are considered by many to be the ultimate goal of AI. The classic book by Stuart Russell and Peter Norvig, Artificial Intelligence: A Modern Approach (Prentice Hall, 1995), defines the field of AI research as “the study and design of rational agents.”

Chip Huyen's article on AI agents provides an overview of agents, defining them as entities that perceive their environment and act upon it using tools. It emphasizes that an agent's capabilities are determined by its environment, the actions it can perform, and the tools it has access to. The article discusses various types of tools, including those for knowledge augmentation, capability extension, and write actions. It also delves into the planning process, highlighting the importance of decoupling planning from execution and the role of reflection and error correction. It explores different planning techniques, including function calling and hierarchical planning, discusses the challenges of tool selection, and stresses the importance of evaluating agents for planning failures, tool failures, and efficiency. It concludes by emphasizing the significance of agents and their potential impact, while acknowledging the need for further research and development in this field.

The article is exceptionally well-written. It explains the concept clearly and accessibly, and provides detailed, relevant examples. It's a bit lengthy, but trust me, it's worth the read.

An agent is like an assistant. You give it a task, such as washing the car, and it figures out the most effective way to accomplish it.

Here's how it might work:

- Plan: The agent researches nearby car washes, compares their prices and services, and picks one.

- Action: The agent drives the car to the chosen car wash, ensures the car is washed, and then drives it home.

- Result: The task is completed.

In an AI agent, the AI model is the brain: it processes the task, plans a sequence of actions to achieve it, and determines whether the task has been accomplished.
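The plan, action, and result loop above can be sketched in a few lines of Python. This is a toy illustration under loud assumptions: `find_car_washes`, `plan`, `execute`, and `is_done` are hypothetical stand-ins (a real agent would call a model to plan and to judge completion, and call real tools to act), and picking the cheapest car wash is just an example criterion.

```python
# Toy agent loop for the car-wash example.
# All functions here are hypothetical stand-ins: in a real agent, plan() and
# is_done() would be model calls, and execute() would invoke real tools.

def find_car_washes():
    """Hypothetical search tool returning nearby car washes."""
    return [
        {"name": "QuickWash", "distance_km": 2.0, "price": 15},
        {"name": "SparkleWash", "distance_km": 5.0, "price": 10},
    ]

def plan(task):
    """Plan: research options, pick one, and return an ordered list of actions."""
    options = find_car_washes()
    choice = min(options, key=lambda o: o["price"])  # cheapest, as an example criterion
    return [("drive_to", choice["name"]), ("wash", None), ("drive_to", "home")]

def execute(action, arg, state):
    """Action: apply one step to the (simulated) world state."""
    if action == "drive_to":
        state["location"] = arg
    elif action == "wash" and state["location"] != "home":
        state["car_clean"] = True  # the car can only be washed at a car wash
    state["log"].append((action, arg))

def is_done(state):
    """Result: decide whether the task has been accomplished."""
    return state["car_clean"] and state["location"] == "home"

def run_agent(task):
    state = {"location": "home", "car_clean": False, "log": []}
    for action, arg in plan(task):
        execute(action, arg, state)
    return is_done(state), state

done, state = run_agent("wash the car")
print(done, state["log"])
# prints: True [('drive_to', 'SparkleWash'), ('wash', None), ('drive_to', 'home')]
```

Keeping planning (`plan`) separate from execution (`execute`) means the plan can be inspected or validated before any action runs.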

Compared to non-agent use cases, agents typically require more powerful models for two reasons:

- Compound mistakes: an agent often needs to perform multiple steps to accomplish a task, and the overall accuracy decreases as the number of steps increases. If the model's accuracy is 95% per step and errors are independent, the overall accuracy drops to about 60% over 10 steps (0.95^10 ≈ 0.60) and to only about 0.6% over 100 steps (0.95^100 ≈ 0.006).

- Higher stakes: with access to tools, agents are capable of performing more impactful tasks, but any failure could have more severe consequences.
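The compound-mistakes arithmetic is easy to check. The sketch below assumes every step succeeds independently with the same probability, which is a simplification; the function names are illustrative. The second function inverts the formula to show how accurate each step must be to hit a target end-to-end accuracy, which is one way to see why agents push toward stronger models.

```python
def compound_accuracy(per_step: float, steps: int) -> float:
    """Probability that all steps succeed, assuming independent, identical per-step accuracy."""
    return per_step ** steps

def required_per_step(target: float, steps: int) -> float:
    """Per-step accuracy needed to reach a target end-to-end accuracy (same assumptions)."""
    return target ** (1 / steps)

for n in (1, 10, 100):
    print(f"{n:>3} steps: {compound_accuracy(0.95, n):.2%}")
# prints:
#   1 steps: 95.00%
#  10 steps: 59.87%
# 100 steps: 0.59%
```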

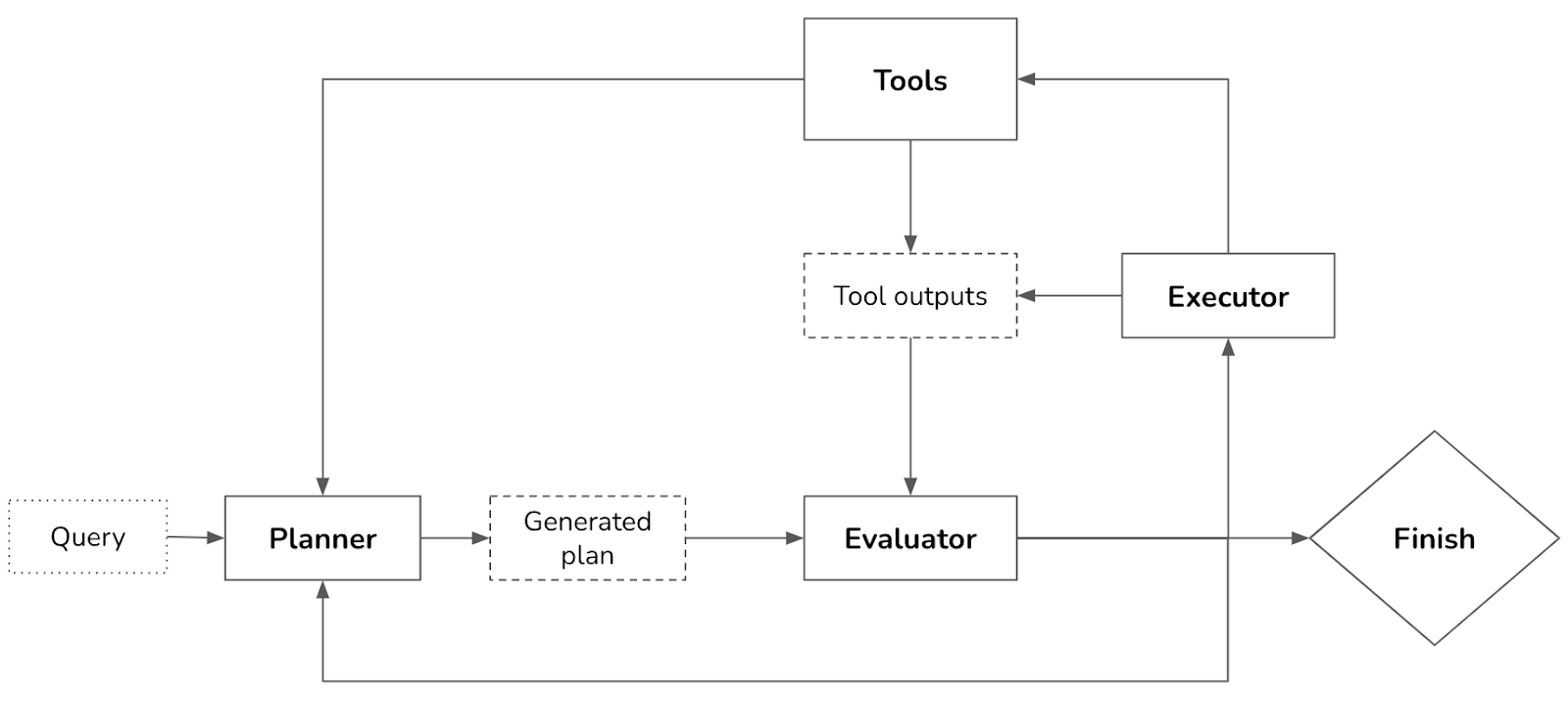

Complex tasks require planning. The output of the planning process is a plan, which is a roadmap outlining the steps needed to accomplish a task. Effective planning typically requires the model to understand the task, consider different options to achieve this task, and choose the most promising one.
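One way to picture "consider different options and choose the most promising one" is to score candidate plans and pick the best. In the hypothetical sketch below, the candidate plans, their estimated success probabilities, and their costs are made up for illustration; in practice a model would propose and evaluate them, and the scoring rule (estimated success minus a weighted cost) is only one possible choice.

```python
from dataclasses import dataclass

@dataclass
class Plan:
    steps: list[str]
    est_success: float  # hypothetical estimated probability that the plan works
    est_cost: float     # hypothetical cost, e.g. money or number of tool calls

def choose_plan(candidates: list[Plan], cost_weight: float = 0.01) -> Plan:
    """Pick the most promising plan: highest estimated success, penalized by cost."""
    return max(candidates, key=lambda p: p.est_success - cost_weight * p.est_cost)

candidates = [
    Plan(["search car washes", "drive to QuickWash", "wash", "drive home"], 0.9, 15),
    Plan(["search car washes", "drive to SparkleWash", "wash", "drive home"], 0.8, 10),
    Plan(["wash the car by hand at home"], 0.6, 2),
]
best = choose_plan(candidates)
print(best.steps)
```

With these made-up numbers the scores are 0.75, 0.70, and 0.58, so the QuickWash plan wins; changing `cost_weight` shifts the trade-off between reliability and cost.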